cargese.io

DAY1: TUESDAY 21

9-12.15: Marc Mezard Statistical physics of inference

14-17.15: Andrea Montanari Lecture notes on two-layer neural networks

18.30: Welcome drink @ the institute

DAY2: WEDNESDAY 22

9-12.15: Alexander Tkatchenko: Bringing Atomistic Modeling in Chemistry and Physics and Machine Learning Together: Part 1 and Part 2.

14-14.45: Guilhem Semerjian Phase transitions in inference problems on sparse random graphs

14.45-15.30: Federico Ricci-Tersenghi Belief Propagation and Monte Carlo based algorithms to solve inference problems on sparse random graphs

15.45-16.30: Afonso Bandeira Statistical estimation under group actions: the sample complexity of multi-reference alignment

16.30-17.15: Leo Miolane Phase transitions in Generalized Linear Models

DAY3: THURSDAY 23

9-12.15: Dmitry Panchenko: Introductory lectures by Dmitry can be found online: Lecture 1 and Lecture 2.

14-14.45: Will Perkins: Bethe states of random factor graphs

14.45-15.30: Alice Guionnet

15.45-16.30: Christina Lee Yu: Iterative Collaborative Filtering for Sparse Matrix Estimation

16.30-17.15: Quentin Berthet Computational aspects in Statistics: Sparse PCA & Ising blockmodel

18.30: Boat trip in Cargese Harbor

DAY4: FRIDAY 24

9-12.15: Nicolas Brunel Learning and memory in recurrent neural networks

14-14.45: Remi Monasson

14.45-15.30: David Schwab

15.30-16.15: Surya Ganguli Theories of deep learning: generalization, expressivity, and training

18.00 - 20.00: EVENING POSTER SESSION I

DAY5: SATURDAY 25

9-12.15: Gérard Ben Arous

14-14.45: Jean Barbier The adaptive interpolation method for the Wigner spike model

14.45-15.30: Ahmed El Alaoui Detection limits in the spiked Wigner model

15.45-16.30: Aukosh Jagannath

16.30-17.15: Marc Lelarge Unsupervised learning: symmetric low-rank matrix estimation, community detection and triplet loss.

DAY6: MONDAY 27

9-12.15: Giulio Biroli Glassy Dynamics in Physics & Beyond

14-17.15: Yann LeCun Deep Learning: Past, Present and Future

DAY7: TUESDAY 28

9-12.15: Sundeep Rangan Approximate Message Passing Tutorial

14-14.45: Cynthia Rush Finite Sample Analysis of AMP

14.45-15.30: Pierfrancesco Urbani

15.45-16.30: Galen Reeves

16.30-17.15: Yoshiyuki Kabashima A statistical mechanics approach to de-biasing and uncertainty estimation in LASSO

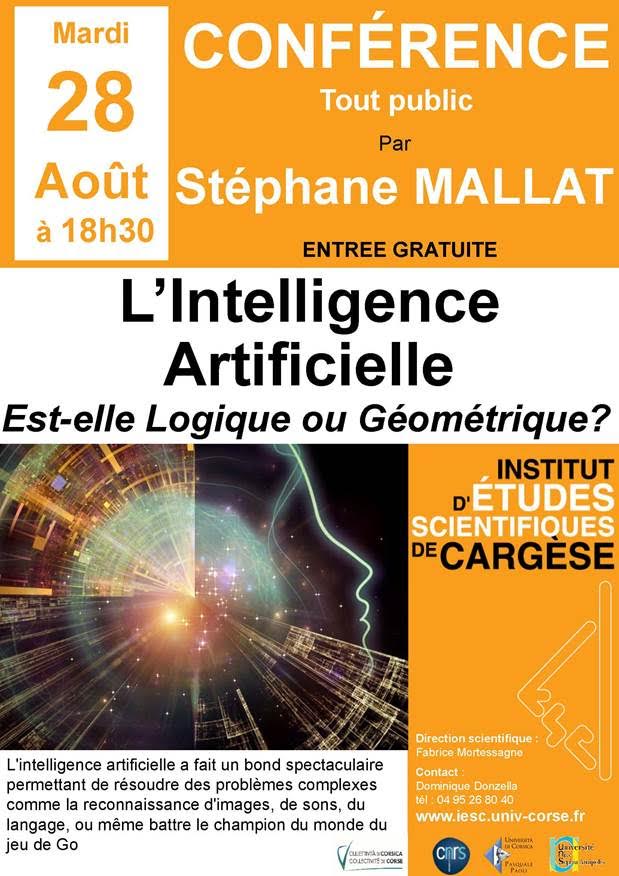

Evening Conference Grand Public by Stephane Mallat

DAY8: WEDNESDAY 29

9-12.15: Naftali Tishby The Information Theory of Deep Learning: What do the layers represent?

14-14.45: Marylou Gabrie Entropy and mutual information in models of deep neural networks

14.45-15.30: Chiara Cammarota

15.30-16.15: Matthieu Wyart Loss landscape in deep learning: Role of a “Jamming” transition

18.00 - 20.00: EVENING POSTER SESSION II

DAY9: THURSDAY 30

9-12.15: Stephane Mallat

14-14.45: Soledad Villar Optimization and learning techniques for clustering problems

14.45-15.30: Samuel Schoenholz Priors for Deep Infinite Networks

15.30-16.15: Francesco Zamponi Random Close Packing vs SAT-UNSAT: a short note

DAY10: FRIDAY 31

9-12.15: Riccardo Zecchina

14-14.45: Levent Sagun An empirical look at the loss landscape

14.45-15.30: Jean-Philippe Bouchaud Eigenvector Overlaps

15.30-16.15: Giorgio Parisi

Details: Poster session I (Friday 24)

Samy Jelassi: Smoothed analysis of the low-rank approach for smooth semidefinite programs

Chris Metzler: Unsupervised Learning with Stein’s Unbiased Risk Estimator

Raffaele Marino: Revisiting the challenges of MaxClique

Gabriele Sicuro: The fractional matching problem

Dmitriy (Tim) Kunisky: Tight frames, quantum information, and degree 4 sum-of-squares over the hypercube

Benjamin Aubin: Storage capacity in symmetric binary perceptrons

Sebastian Goldt: Stochastic Thermodynamics of Learning

Christian Schmidt: Estimating symmetric matrices with extensive rank

Adrian Kosowski: Ergodic Effects in Token Circulation

Chan Chun Lam: Adaptive interpolation scheme for inference problems with sparse underlying factor graph

Inbar Seroussi: Phase Transitions in Stochastic Diffusion on a General Network

Jonathan Dong: Optical realization of Echo-State Networks with light-scattering materials

Mihai Nica: Universality of log-normal distribution for randomly initialized neural nets

Andrey Lokhov: Understanding the nature of quantum annealers with statistical learning.

Eric De Giuli: Random language model – a path to structured complexity

Grant Rotskoff: Neural networks as interacting particle systems

Details: Poster session II (Wednesday 29)

Endre Csóka: Local algorithms on random graphs and graph limits

Clément Luneau: Entropy of Multilayer Generalized Linear Models: proof of the replica formula with the adaptive interpolation method

Pan Zhang: Unsupervised Generative Modeling Using Matrix Product States

Andre Manoel: Approximate Message-Passing for Convex Optimization with Non-Separable Penalties

Joris Guerin: Improving Image Clustering With Multiple Pretrained CNN Feature Extractors

Ada Altieri: Constraint satisfaction mechanisms for marginal stability in large ecosystems

Federica Gerace: From statistical inference to a differential learning rule for stochastic neural networks.

Neha Wadia: In Search of Critical Points on Deep Net Optimization Landscapes

Luca Saglietti: Role of synaptic stochasticity in training low-precision neural networks

Carlo Lucibello: Limits of the MAP estimator in the phase retrieval problem

Satoshi Takabe: Trainable ISTA for Sparse Signal Recovery

Antoine Maillard: The committee machine: Computational to statistical gaps in learning a two-layers neural network

Stefano Sarao: Performance of Langevin dynamics in high dimensional inference

Aurélien Decelle: Thermodynamic properties of restricted Boltzmann machines

Beatriz Seoane Bartolomé: Can a neural network learn a gauge symmetry?

Alia Abbara: Universal transitions in noiseless compressed sensing and phase retrieval

Tomoyuki Obuchi: Accelerating Cross-Validation in Multinomial Logistic Regression with L1-Regularization

Announcement:

Enjoying the school? We, the organizers (Florent Krzakala and Lenka Zdeborova), are looking for postdocs on these topics. Come talk to us during the conference!

Twitter hashtag: #cargese2018

More information on the institute webpage